David's update

This week, I have been working on importing, reading, and translating the various digital map layers.

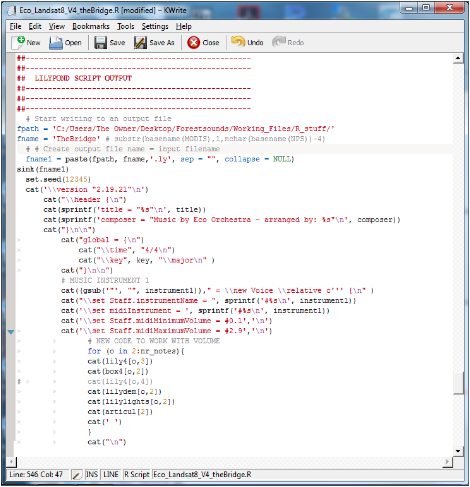

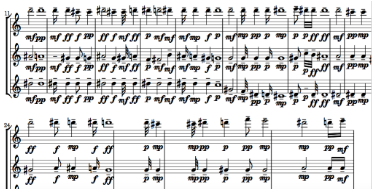

I wanted to give Christina a short sample of the music. It isn’t the final version, but it captures the general structure that Christina and I have been chatting about: tone, volume, and rhythm.

The stream flow data (previous post) was imported into R and then converted to map coordinates. Those coordinates are then used to identify specific pixels on each of the other datasets (spectral, elevation). The values of those pixels are then translated to tone (MODIS), volume (Night Lights), and rhythm (DEM). Each translated value is derived by finding the pixel's value in a separate look-up table (LUT) and replacing it with the corresponding entry. I can manipulate the LUT to change each of the musical attributes.

However, before that information gets passed along to LilyPond, the translated notes need to be written in a language that LilyPond can understand. Here is a segment of the computer code that outputs a .ly file that can be read by LilyPond. First, I identify the file and where to save it. Next, all of the translated data gets concatenated for each line. So, for example, if I had these pixel values for each of the map layers...

MODIS NIR = 40
DEM slope = 100
Night Light = 5

I would end up with a middle “C” quarter note played at mezzo piano. To play this in LilyPond, the text would read C4\mp. By combining all of the values that were translated from the digital map data, the program can write sheet music and export to MIDI.

Here you can see an example of the sheet music. I still have some bugs to fix in separating out the individual measures. LilyPond was made so that you could hand-type your music, not automatically translate data, so I still need a feedback step that checks the number of notes played against the time signature. However, that does not interfere with the actual sound file.
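The look-up and concatenation steps described above can be sketched roughly as follows. This is only an illustration in Python, not the R code used for the project: the LUT contents, the function names, and the `\version` string are all assumptions, and each table holds just the single example value from the text. One detail worth noting is that LilyPond's absolute pitch notation writes middle C as `c'`, so the sketch emits `c'4\mp` for the example pixel values.

```python
# Hypothetical look-up tables (LUTs). The real tables and their value
# ranges are not shown in the post; these hold only the worked example.
TONE_LUT = {40: "c'"}      # MODIS NIR pixel value -> pitch (c' is middle C)
RHYTHM_LUT = {100: "4"}    # DEM slope pixel value -> duration (4 = quarter note)
VOLUME_LUT = {5: "\\mp"}   # Night Lights pixel value -> dynamic (mezzo piano)


def translate_pixel(modis_nir, dem_slope, night_light):
    """Look up each layer's pixel value and concatenate into one LilyPond note."""
    return TONE_LUT[modis_nir] + RHYTHM_LUT[dem_slope] + VOLUME_LUT[night_light]


def build_ly(notes):
    """Wrap a list of note strings in a minimal score with layout and MIDI output."""
    body = " ".join(notes)
    return (
        '\\version "2.24.0"\n'   # assumed version; adjust to the installed LilyPond
        "\\score {\n"
        "  { " + body + " }\n"
        "  \\layout { }\n"       # engrave sheet music
        "  \\midi { }\n"         # also export a MIDI file
        "}\n"
    )


def write_ly(path, notes):
    """Save the generated LilyPond source to a .ly file."""
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(build_ly(notes))


if __name__ == "__main__":
    note = translate_pixel(modis_nir=40, dem_slope=100, night_light=5)
    print(note)
    print(build_ly([note]))
```

Changing an entry in any of the three dictionaries changes the corresponding musical attribute without touching the rest of the pipeline, which is the appeal of keeping the translation in LUTs.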

Here is a short draft sample of music translated over the Susquehanna. For the sample, I reduced the length of the entire song by skipping over a few pixels for each note. The next steps will be to revise and clean up the code and the LUTs.
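As a rough illustration of two of the ideas above, the Python sketch below thins a list of pixels by a fixed step and groups note durations into measures so that each bar can be checked against the time signature. Both function names, the step size, and the 4/4 default are assumptions made for the example, not the project's actual code.

```python
from fractions import Fraction


def subsample(pixels, step):
    """Keep every `step`-th pixel along the stream to shorten the piece."""
    return pixels[::step]


def split_into_measures(durations, beats_per_measure=4, beat_unit=4):
    """Group LilyPond durations (1, 2, 4, 8, ...) into measures.

    A measure is closed when it is exactly full, or early when the next
    note would overflow it. A fuller fix might tie or split that note.
    """
    capacity = Fraction(beats_per_measure, beat_unit)
    measures, current, filled = [], [], Fraction(0)
    for duration in durations:
        length = Fraction(1, duration)
        if current and filled + length > capacity:
            # Next note does not fit: close this (underfull) measure first.
            measures.append(current)
            current, filled = [], Fraction(0)
        current.append(duration)
        filled += length
        if filled == capacity:
            measures.append(current)
            current, filled = [], Fraction(0)
    if current:
        measures.append(current)  # trailing, possibly incomplete measure
    return measures


if __name__ == "__main__":
    print(subsample(list(range(10)), 3))
    print(split_into_measures([4, 4, 4, 4, 2, 2]))
```

Any measure whose durations do not add up to the bar's capacity is one that would need attention before the sheet music reads cleanly, even though the MIDI output is unaffected.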

David Lagomasino is an award-winning research scientist in Biospheric Sciences at the NASA Goddard Space Flight Center in Maryland, and co-founder of EcoOrchestra.

Christina Catanese is a New Jersey-based environmental scientist, modern dancer, and director of Environmental Art at Schuylkill Center for Environmental Education.
