|

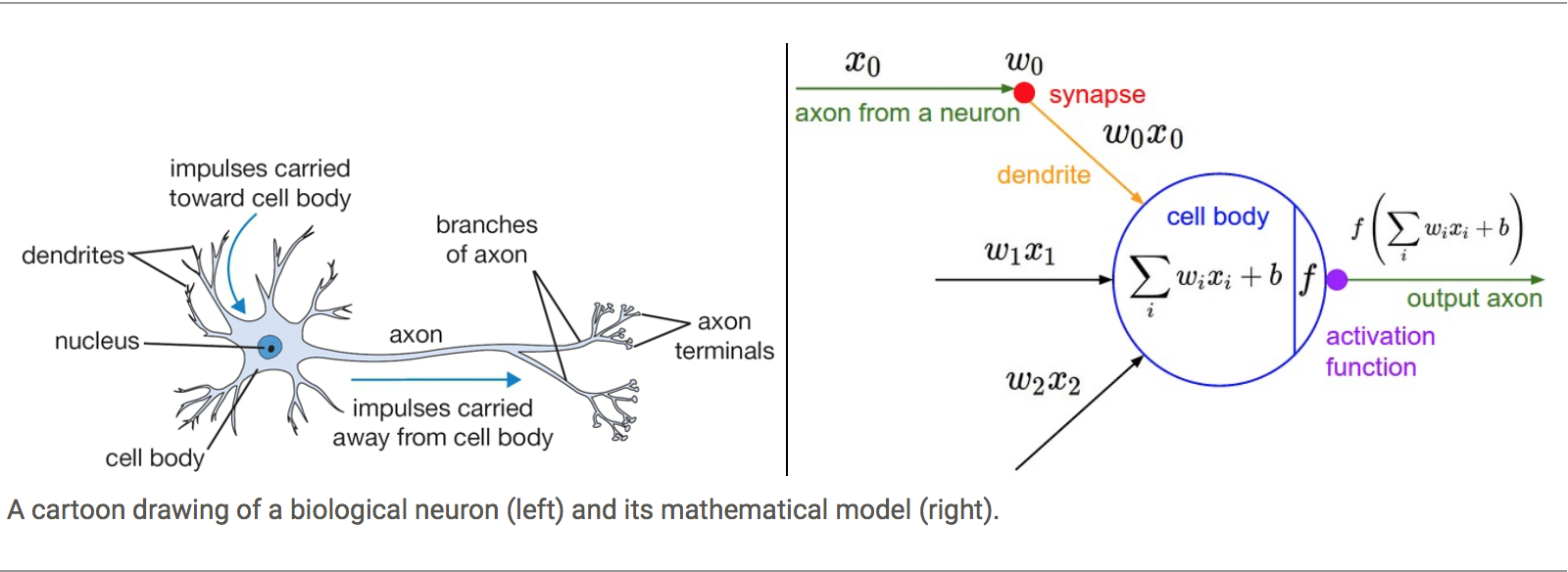

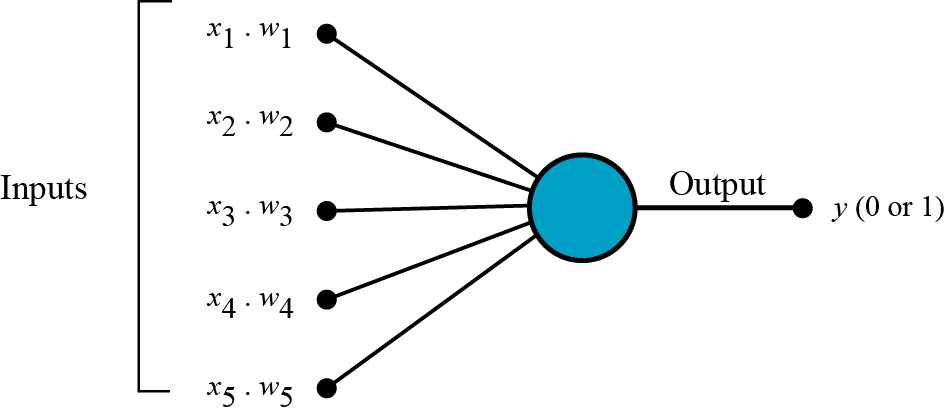

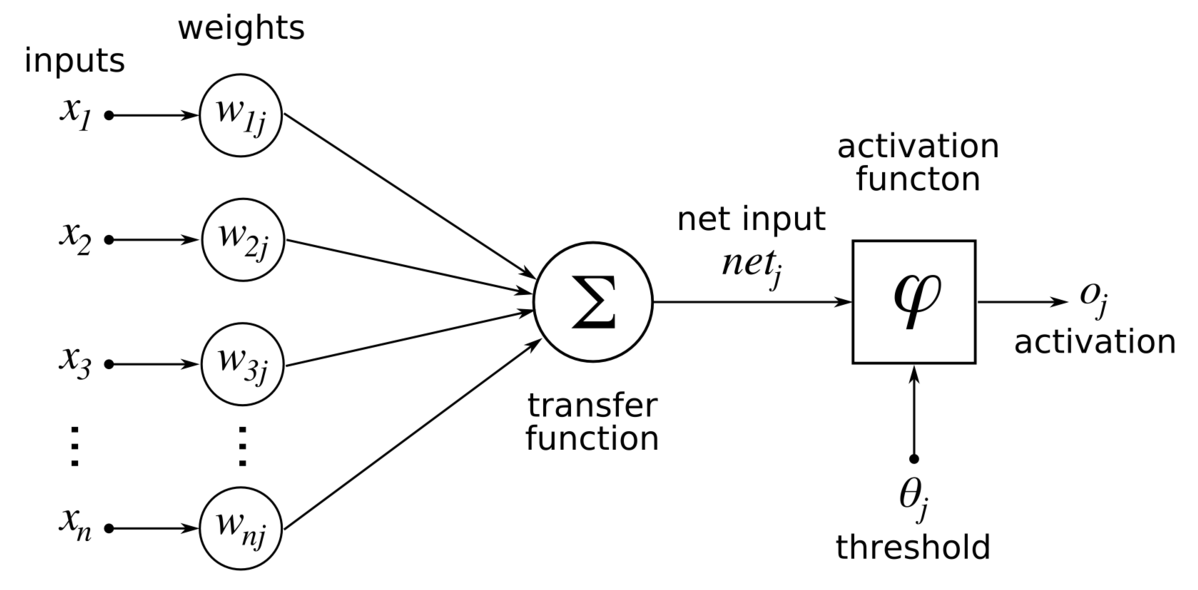

Diaa

If A, B, C, and D are four pieces of information stored in a human memory “M”, then when A, B, C, and D pass through “M” (that is, are stored and then recalled) they become Am, Bm, Cm, and Dm. The following terms are used:

IR: Internal revising processes (revising information by passing it through the existing related information in our memory).

ER: External revising processes (revising information by passing it through newly acquired related information in our memory).

IT: Internal time (the time elapsed since the new information was stored in our memory, or internal revising time).

ET: External time (the time elapsed since the related information was acquired).

RP: Recalling processes (procedures responsible for evoking the stored information under particular circumstances).

So,

A ≠ Am, B ≠ Bm, C ≠ Cm, D ≠ Dm

But,

Am = A + IR + ER ⟶ through (IT & ET)

Bm = B + IR + ER ⟶ through (IT & ET)

Cm = C + IR + ER ⟶ through (IT & ET)

Dm = D + IR + ER ⟶ through (IT & ET)

Information is processed neurally in our brain, where the axon carries impulses away from the cell body to the axon terminals. However, the difference between the daily neural processing of information and information held in memory lies in time: the information remains in our brain, but not in its original state. We often reject pieces of information and arguments in daily life, yet after a while we may come to accept some of them. Such processes, in which a particular argument gains a level of justification, are carried out (consciously or unconsciously) during the internal and external revising processes inside our memory, and are vitally affected by the time spent in them. Within this context, the role of memory in generating knowledge is vital!

Artificial neural networks (ANNs) can simulate normal information processing by modeling the biological neuron mathematically.
At its most basic level, the mathematical model of the biological neuron can be implemented algorithmically by introducing the concept of the perceptron.

One of the key elements of a neural network is its ability to learn. A neural network is not just a complex system, but a complex adaptive system, meaning it can change its internal structure based on the information flowing through it. Typically, this is achieved by adjusting weights. In the diagram (fig. 3), each line represents a connection between two neurons and indicates the pathway for the flow of information. Each connection has a weight, a number that controls the signal between the two neurons. If the network generates a “good” output (which we’ll define later), there is no need to adjust the weights. However, if the network generates a “poor” output (an error, so to speak), then the system adapts, altering the weights in order to improve subsequent results. Within this context, there are two keywords to consider:

1- Weight: Techopedia explains weight as follows: In an artificial neuron, a collection of weighted inputs is the vehicle through which the neuron engages in an activation function and produces a decision (either firing or not firing). Typical artificial neural networks have various layers including an input layer, hidden layers and an output layer. At each layer, the individual neuron is taking in these inputs and weighting them accordingly. This simulates the biological activity of individual neurons, sending signals with a given synaptic weight from the axon of a neuron to the dendrites of another neuron.

2- Activation function: An activation function is a function used to transform the activation level of a unit (neuron) into an output signal. Typically, an activation function has a “squashing” effect.
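To make the two keywords concrete, here is a minimal sketch of a single perceptron in Python. It is only an illustration under stated assumptions: the function names, the learning rate, the number of epochs, and the logical-AND training data are all hypothetical choices and do not come from the model above. The sketch shows weighted inputs feeding an activation function, and weights being left alone on a “good” output and adjusted on a “poor” one.

```python
import math


def step(activation: float) -> int:
    """Threshold activation: the neuron either fires (1) or does not fire (0)."""
    return 1 if activation >= 0.0 else 0


def sigmoid(activation: float) -> float:
    """A 'squashing' activation: maps any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-activation))


def weighted_sum(inputs, weights, bias):
    """Activation level of the unit: each input scaled by its weight, plus a bias."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias


def train_perceptron(samples, learning_rate=1.0, epochs=20):
    """Perceptron learning rule (learning_rate and epochs are illustrative values)."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            output = step(weighted_sum(inputs, weights, bias))
            error = target - output  # 0 for a "good" output, so nothing changes
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Hypothetical training data: the logical AND of two binary inputs.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_SAMPLES)
predictions = [step(weighted_sum(x, weights, bias)) for x, _ in AND_SAMPLES]
print(predictions)  # [0, 0, 0, 1]
```

The step function gives the firing / not firing decision described under Weight, while the sigmoid is one common example of the squashing effect mentioned under Activation function.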

Coming back to our first assumption: adding a time coefficient to the weights of neural units and to the activation functions, and then investigating the positive or negative effect of time on the justification rate achieved through the memorization mechanism, would reflect the role of our memories in generating or revising knowledge.
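One possible way to picture this proposal is sketched below. Everything in it is an assumption made for illustration: the exponential form of the time coefficient, the single `elapsed` value standing in for the time spent in revising (IT and ET), the `rate` parameter, and the sample weights are hypothetical and are not defined by the model above. The sketch only shows how a time-scaled weight could move the same argument from rejected to accepted, or push it further toward rejection.

```python
import math


def sigmoid(z: float) -> float:
    """Squashing activation, read here as a justification rate between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def time_coefficient(elapsed: float, rate: float) -> float:
    """Hypothetical coefficient: 1.0 at elapsed = 0, growing if rate > 0,
    shrinking if rate < 0."""
    return math.exp(rate * elapsed)


def justification(inputs, weights, bias, elapsed, rate):
    """Activation of one unit whose weights are scaled by the time coefficient."""
    c = time_coefficient(elapsed, rate)
    z = sum(x * w * c for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


# Hypothetical argument: two supporting inputs with modest weights.
inputs, weights, bias = (1.0, 1.0), (0.5, 0.5), -2.0

at_first = justification(inputs, weights, bias, elapsed=0.0, rate=0.1)
positive_time = justification(inputs, weights, bias, elapsed=10.0, rate=0.1)
negative_time = justification(inputs, weights, bias, elapsed=10.0, rate=-0.1)

# Below 0.5 is read as "rejected", above 0.5 as "accepted" (an assumed threshold).
print(at_first < 0.5, positive_time > 0.5, negative_time < at_first)
```

With a positive rate, the argument that was rejected at first crosses the assumed acceptance threshold after enough time; with a negative rate, its justification rate falls further.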

2 Comments

11/24/2018 02:15:44 pm

I love this mathematical model of memory and thought. Yana and I have been exploring neural networks and function as it relates to scientific and artistic work. In one of my recent SciArt residency blog posts, I talked about scientific models as a way of expressing highly abstract function/ concepts. I would like to also refer to your model if that is ok.

|